UNIT: Dedmen, Programmer, Programming

TO: Arma 3 Users

OPSUM: An analysis of recent multithreading improvements for a smoother game experience

SITREP

Welcome to this technical deep dive into Arma 3's performance optimizations in update 2.20. Before handing you over to this blog's actual author, I wanted to share a few words on the state of Live Ops. Yes, REPception is now a thing.

Some 12 years since its initial release, we still find ourselves releasing free platform updates to the game. This is something we can hardly believe ourselves, and we think you'll agree that it's a splendid situation! Thanks to the continued support from the game's community and Bohemia Interactive leadership, we're still in a position to offer some limited Live Ops development. The hands-on team can be counted on one hand now, but we should not forget it takes a bigger support network to keep things flowing smoothly. Publishing, Quality Assurance, Localization, Legal, IT, and more teams and individuals have a hand in getting these updates out. Meanwhile, some of our longer-term plans, as outlined in older SITREPs, are still on our list. Their roll-out has been slower than anticipated, but that's in part due to it just not being necessary to switch to the next phase of Arma 3's life cycle. Even now that we're steadily traveling the road towards Arma 4, there is still plenty of life left in our pinnacle Real Virtuality (RV) title.

Those of you who are on our Discord server (#perf_prof_branch channel) may have been following along with the optimizations now released to main branch in 2.20. You may have already enjoyed Programmer "Dedmen"'s incredible monologues on specific issues, solutions, fixes, and a myriad of other RV topics. You may have also wondered why these optimizations only arrive now. There are many reasons for that, some of which Dedmen will outline below. It's hard to compare the prime-time development circumstances a decade ago to how things are now. There are effectively no hard deadlines for Arma 3 anymore, so there is ample time to investigate and experiment. There also aren't hundreds of commits from tens of people every day, with a constant stream of new features arriving and bugs being squashed. We are building upon the work of legends, going all the way back to the first lines of code for RV by Ondřej Španěl.

Nonetheless, I want to give massive kudos to the incredible work by our talented Dedmen. He has accomplished low-level and risky changes that I would have never anticipated at this stage. They will not magically give everyone the same performance boost across the board, but don't just stare blindly at the numbers. Play the game. Most everyone who's tried the update has said the game feels smoother. Thank you for the passionate and hard work, Dedmen. And a big thanks to everybody who has helped us test these changes via Profiling branch as well. Your feedback has been very important in testing under real-world conditions.

Enough from me, let's finally get to the meat of this Operations Report.

- Joris-Jan van 't Land, Project Lead

FRAMES

First of all, we did not "add" multithreading in update 2.20. The RV engine has had multithreading since Arma 2. But we did overhaul all of our multithreading code and made some optimizations that are further detailed below.

The main focus of our optimizations was to fix lag spikes and raise the minimum Frames per Second (FPS). The maximum FPS may not actually be noticeably higher, and in some cases (at above 100 FPS) it may even be slightly lower than before. But the gameplay should still feel significantly smoother.

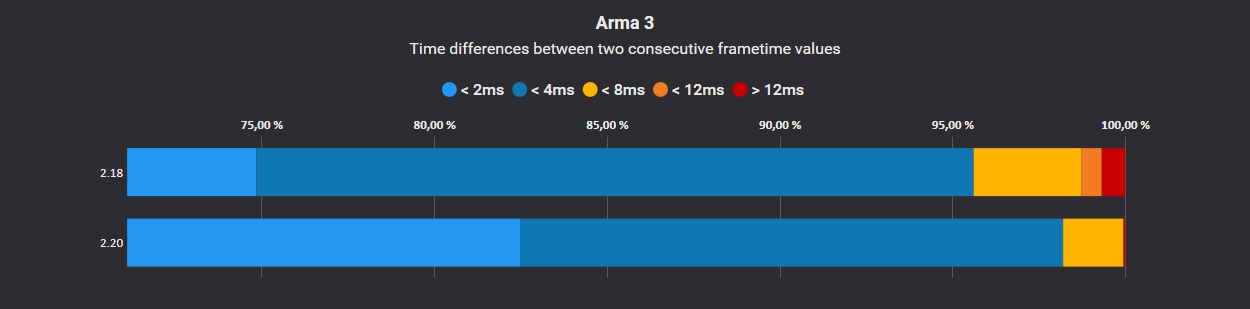

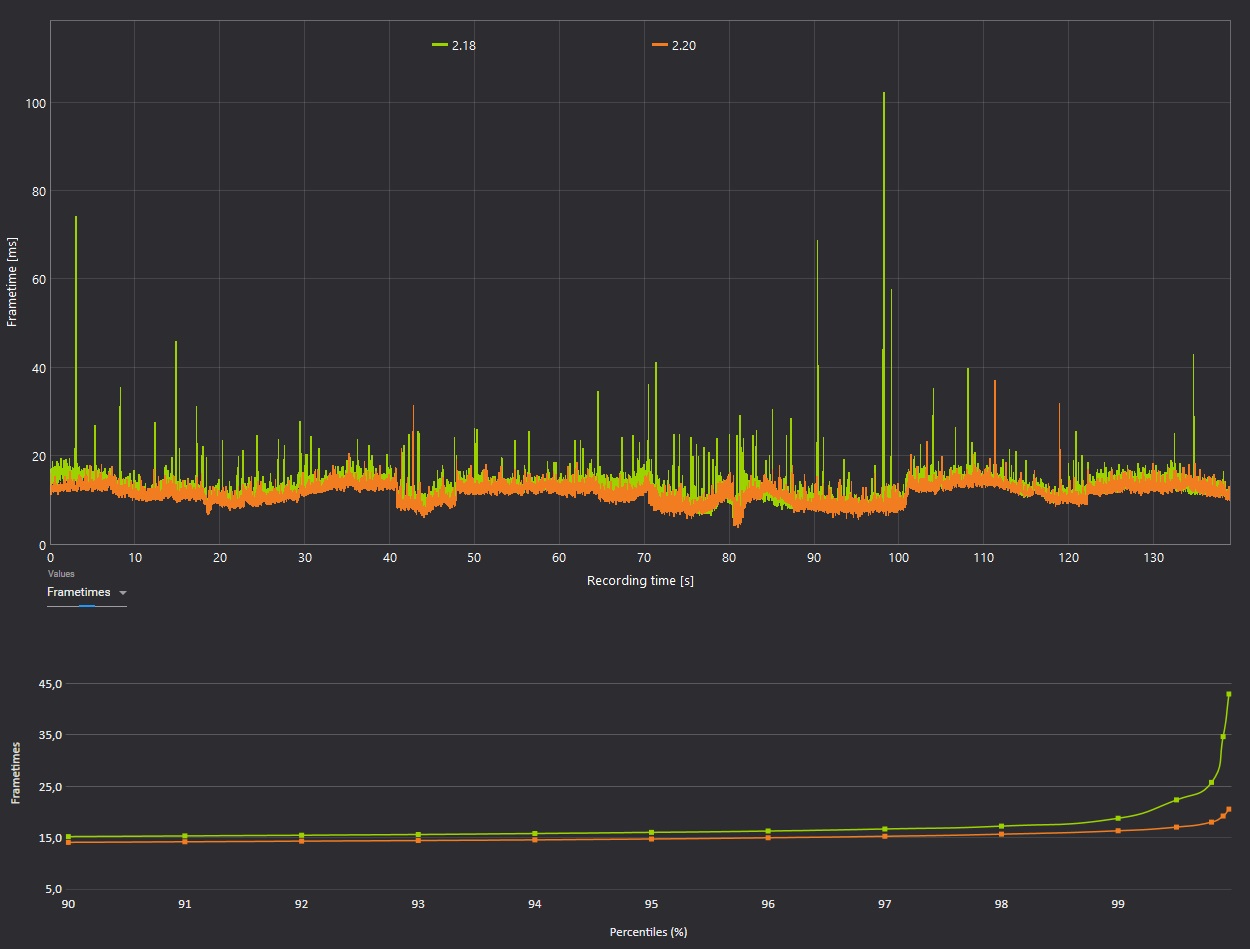

This is also visible in these graphs recorded using CapFrameX on the excellent Yet Another Arma Benchmark (YAAB) by Sams. The frame times should feel more consistent, with fewer spikes:

The average FPS, meanwhile, has only received a minor bump. And that bump is expected to be even less when mods get involved.

COMMAND-LINEAGE

From the feedback on Profiling branch we have seen several times that players had issues caused by setting wrong command-line parameters. That was typically because an old optimization guide told them it would make their game run better. But now that the game is more multithreaded than it used to be, bad settings have a more noticeable and potentially negative impact.

If you are setting custom parameters, please verify that you understand them and you are setting them correctly. Here are some guidelines:

- Only use the -cpuCount parameter if you want to intentionally limit how many CPU cores the game can use. The engine can already automatically detect how many cores you have, so you do not need to tell it that.

- The -enableHT parameter has no effect if -cpuCount is set, so setting both is wrong. Our tests have shown that -enableHT performs worse, but it may depend on your hardware. You can use YAAB to test if it actually helps in your case.

- The -exThreads parameter should not be used. If you have a 4-core CPU or better, all exThreads are already enabled by default. And if you have fewer than 4 cores, enabling more exThreads is more likely to cause harm than to be helpful.

- If you are using an external tool to adjust the "Process Affinity", which reduces the number of CPU cores the game can access, you need to use -cpuCount to inform the game about how many cores it can actually use.
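The guidelines above can be condensed into a small checker. This is only an illustrative sketch: `check_launch_parameters` is a hypothetical helper, not a tool shipped with the game, and matching parameter names case-insensitively is an assumption made here for convenience. The process affinity rule is left out because it cannot be judged from the parameter list alone.

```python
def check_launch_parameters(parameters):
    """Return warnings for parameter combinations the guidelines advise against.

    Hypothetical helper for illustration only; it is not part of Arma 3.
    """
    # Keep only the parameter name, e.g. "-cpuCount=4" -> "-cpucount".
    # Case-insensitive matching is an assumption of this sketch.
    names = {parameter.split("=", 1)[0].lower() for parameter in parameters}
    warnings = []
    if "-cpucount" in names and "-enableht" in names:
        warnings.append("-enableHT has no effect while -cpuCount is set.")
    if "-exthreads" in names:
        warnings.append("-exThreads should not be used.")
    return warnings


for warning in check_launch_parameters(["-cpuCount=4", "-enableHT", "-exThreads=7"]):
    print(warning)
```

A parameter list that only limits the core count on purpose, such as `["-cpuCount=4"]`, produces no warnings.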

SYSTEM REQUIREMENTS

There is one more bit of housekeeping to get out of the way. Update 2.20 will be the last version of Arma 3 supporting its 32-bit application to a reasonable degree. We will no longer maintain both builds, and will focus any future efforts purely on 64-bit. The vast majority of Steam users and Arma 3 players have long since switched over, and we hope everyone else will too, especially those who may be unintentionally using the 32-bit version. We will keep a frozen 2.20 legacy branch available on Steam for those who really need it, but it will unfortunately not be possible for it to be forward-compatible (no multiplayer with newer versions). Our motivation for doing this is simply that it has been increasingly restrictive to maintain 32-bit compatibility. It has restricted the usage of newer C++ language features, certain optimizations, etc. Deprecating support will save our very small remaining Live Ops team valuable time, and it will unlock some more optimization opportunities. In a similar vein, we will also drop support for Windows 7 and 8, just like Steam has already done for its client.

To reflect the above, as well as the hardware advancements and availability over the past decade, we have finally refreshed Arma 3's minimum and recommended system requirements (as seen, for example, at the bottom of the Steam store page). While the original minimum settings were validated to run the game, we have now raised them to a level that is more realistic for an entry-level experience. The recommended requirements, meanwhile, were bumped a bit more, so that such configurations should actually run the game well. That said, it’s possible that setups lower than the new minimum still somehow run the game, but we hope this is a better reflection of the current state of Arma 3 for new purchasers.

OLD VERSUS NEW

To understand what is new since update 2.20, we first need to understand how the game has worked in the past.

The core of multithreading is the so-called "job system". Its purpose is to take our tasks (jobs) and to distribute them over all the CPU cores we have available, so they can run in parallel.

How the job system is designed determines how we can use it. The old design (in use since Arma 2) worked, but it was quite primitive. It only allowed submitting jobs as one block, and only one block could be active at a time. This means all jobs had to be submitted at once, after which we had to wait for them to finish before we could continue doing other things.

This is also called "Fork-Join".

The system worked fine in bulk processing, for example for particle effect simulation. We can split 1000 particles into five jobs, and run them in parallel, each thread handling 200 particles.
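As a rough illustration of the Fork-Join pattern (not engine code; RV is written in C++, and this is a Python sketch with made-up particle data), the 1000 particles can be cut into five chunks up front, handed to a thread pool, and collected again before anything else happens. The names `simulate_chunk` and `simulate_fork_join` are hypothetical, and Python threads are used only to show the control flow, not to demonstrate a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def simulate_chunk(particles, time_step):
    """Advance one chunk of (position, velocity) particles by one time step."""
    return [(position + velocity * time_step, velocity)
            for position, velocity in particles]


def simulate_fork_join(particles, time_step, job_count=5):
    """Split the work into job_count chunks, run them in parallel, then join."""
    # Ceiling division, so 1000 particles and 5 jobs give chunks of 200.
    chunk_size = max(1, -(-len(particles) // job_count))
    chunks = [particles[start:start + chunk_size]
              for start in range(0, len(particles), chunk_size)]
    with ThreadPoolExecutor(max_workers=job_count) as pool:
        # Fork: every chunk becomes one job.
        results = pool.map(simulate_chunk, chunks, [time_step] * len(chunks))
        # Join: the caller blocks here until every chunk has finished.
        return [particle for chunk in results for particle in chunk]


particles = [(float(index), 1.0) for index in range(1000)]
updated = simulate_fork_join(particles, time_step=0.5)
```

Note that the caller can do nothing else between the fork and the join, which is exactly the limitation of this pattern.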

This style of multithreading was used in quite a few places, and if we just look at these particle effects in isolation, then this solution seems perfect. We now get the same particles done in one fifth of the time. That is already great, but can we do better?

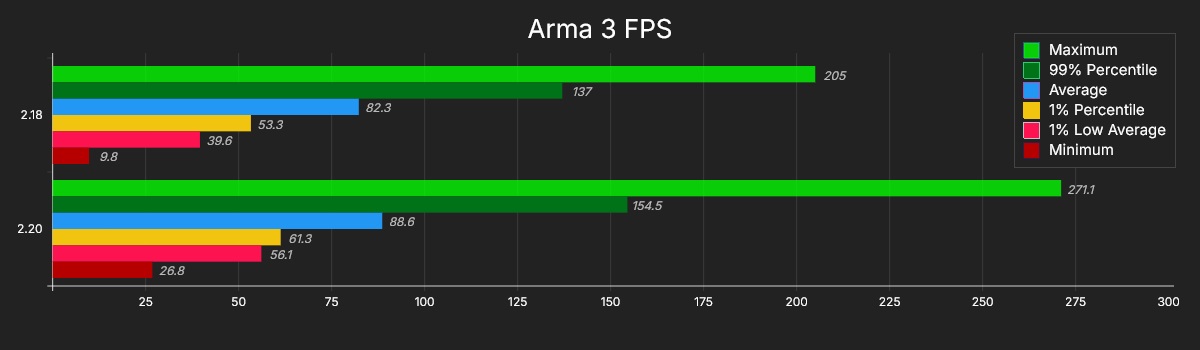

Game engines rarely do just one thing. If we look at what surrounds the particle simulation as a case study, we get to see the issue with this approach. The actual code around it does the following tasks:

- Simulate sounds

- Update wind emitters (helicopter blades cause wind vortices, which influence particles)

- Simulate "safe" particles (these can run in parallel with each other)

- Simulate "scripted" particles (these execute scripts, which we cannot do in parallel; I will explain the reasons for this below)

- Delete expired particles (after all simulation is done)

- Simulate Artificial Intelligence (AI)

- Present the previous frame (that we have rendered in parallel) to the GPU

In 2.18 and prior, that would look like this:

Our particles are nicely parallelized, though things are not evenly distributed. Because we have to cut up the tasks beforehand, balancing them perfectly does not always work out. But there is quite a lot of empty space where our extra CPU cores are just waiting without doing any work for us. We do not want to waste this time.

To find out how we can better distribute the work, we need to look at the constraints that apply to our tasks. As we touched upon above, scripted particles cannot run in parallel, and we can only delete expired particles after the simulation is done. Our other tasks also have similar constraints with regards to when they can run in relation to each other.

- Sounds do not care about particles, but they cannot run in parallel to AI.

- "Safe" particles can run in parallel to each other, and must be finished before deleting expired particles.

- "Scripted" particles cannot run in parallel to each other, but the whole task could run in parallel to the safe particles, and it must be finished before deleting expired particles. It also cannot run in parallel to sound or AI.

- AI cannot run in parallel to sounds or scripted particles. It does not care about other particles (AI vision is done elsewhere).

- Presenting the frame is not related to any of this. But it must be done only on the main thread, and it must be finished before we continue.

Now we can try to puzzle these tasks together, in a way that we distribute them across as many cores as possible, while also keeping all of their constraints in check. We could, for example, run sounds and "safe" particles together on the worker threads. And as soon as sounds are done, we can run either "scripted" particles or AI. Meanwhile, we can present the frame on the main thread, and then delete expired particles once all particles are done.

But this is where our old job system blocks us, since we cannot run different kinds of tasks in parallel. We also cannot add some job into the mix while another is running. We also cannot do something different on the main thread while the jobs are running. And it’s not just about particles; the same kind of problem appears in other places. Something as simple as "run this task in parallel, do something else, then later grab its result" was not doable. If we wanted to solve this problem, we needed a new solution.

There are quite a few existing libraries, like Taskflow or enkiTS, but in the end we decided to go with Enfusion's system. This is a job system our engine team had already developed in-house for new games like Arma Reforger and Arma 4. It does the things we need, so how cool is it that Arma 3 can still benefit from such technology!

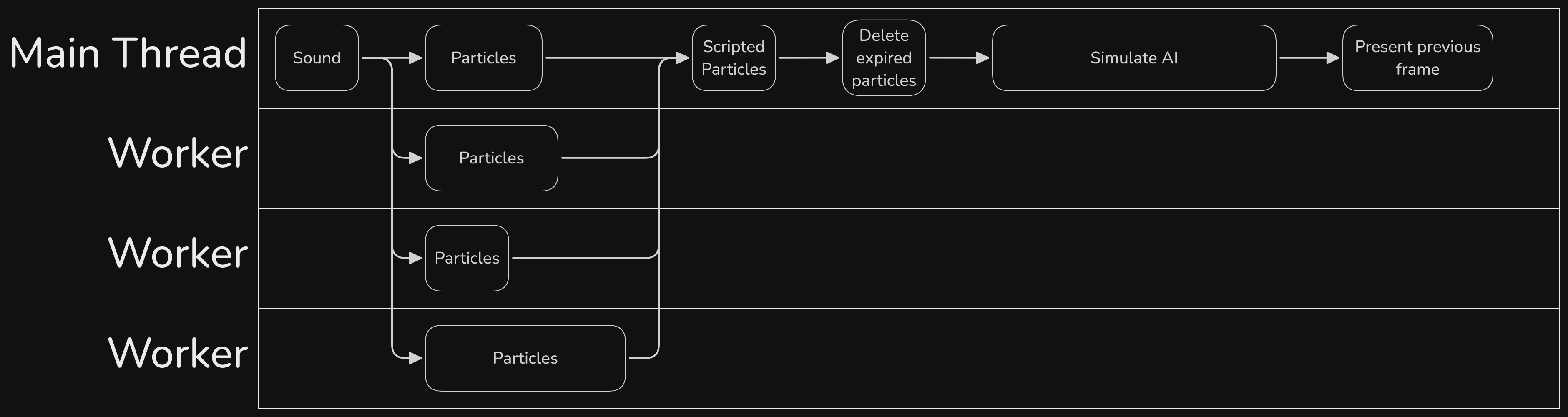

The Enfusion job system is graph-based. So instead of providing a block of tasks, we can set up a graph of connected tasks. This lets us puzzle together our tasks like described above.

If we give this graph to the job system, and execute it, we will see something like this:

All of the task blocks and the gaps between blocks are the same as before. Because the task system now knows all the dependencies, it can grab the next task to work on whenever it has some free time. We did the same work, but utilized more of our CPU cores, and we got done with it in about half the time.

Previously, our multithreading capabilities were just being able to cut up one block of work into chunks and running them in parallel. By utilizing Enfusion's system, we can now take whole blocks of work, and mesh them together like a giant puzzle.
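To make the idea of a dependency graph more concrete, here is a minimal sketch using Python's standard `graphlib` module. It is not Enfusion's API. The task names are taken from the particle case study, and the "cannot run in parallel to" constraints between sounds, scripted particles, and AI are expressed by simply chaining those three tasks, which is one valid ordering among several. For simplicity the sketch groups tasks into lockstep "waves", whereas a real job system would start each task as soon as its own dependencies are done.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it may start.
# Chaining sounds -> scripted particles -> AI keeps those three from
# overlapping; this ordering is a choice made for the sketch.
DEPENDENCIES = {
    "sounds": set(),
    "safe_particles": set(),
    "present_frame": set(),  # independent, but must stay on the main thread
    "scripted_particles": {"sounds"},
    "ai": {"scripted_particles"},
    "delete_expired": {"safe_particles", "scripted_particles"},
}


def schedule_in_waves(dependencies):
    """Group tasks into waves; tasks within one wave may run in parallel."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        sorter.done(*ready)  # pretend the whole wave has finished
    return waves


for number, wave in enumerate(schedule_in_waves(DEPENDENCIES), start=1):
    print(number, wave)
```

With these dependencies, the first wave contains the frame presentation, the safe particles, and the sounds. The scripted particles follow, and AI and particle deletion come last.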

We'll see in the next section how we can use this to multithread things that were previously not possible.

APPLYING NEW CAPABILITIES

Artificial Intelligence (AI) is a big topic for Arma. Players always want more of it, but they also do not want to pay the performance hit for it. While rendering and simulation are often quite stable, we see the biggest lag spikes happening in AI calculations. So, if we wanted to improve performance, we had to focus on that area.

The simple approach, one that we would love if it actually worked, would be to compute all AI groups in parallel. But sadly (or not, because this is what makes all the AI mods, which we love so much, possible), AI is running scripts. And we cannot parallelize that. Let’s take a look and try to figure out what we can do.

This is a typical AI simulation cycle with a few groups:

This is greatly simplified; there are a lot of steps missing, and I won’t explain most things here.

Like we did before with the particles, we again have to consider the constraints here.

- The Finite State Machine (FSM) updates cannot run in parallel.

- The "Scanning for potential targets" and "Preparing the Pathfinding Grid" tasks can.

- And the "Track Targets" task that every individual unit is doing also cannot run in parallel.

We cannot run the groups in parallel because the FSM updates would conflict. We cannot even run the units in that first group in parallel, because the “Track Targets” would conflict.

What we would love to do is to somehow pull out the multithreadable parts. Previously, that was not technically possible, but now we have new options. What we would need is a way to run through all groups, collect all the tasks, then run the tasks in parallel, and finally run through the groups a second time and feed them with the results of the tasks that we ran for them.

One way would be to split up the AI simulation into chunks, and then run through the groups multiple times. First only run the chunks that give us jobs, then do them, then run through the rest and provide the results. But due to how complex our AI is, that would take weeks, and we would very likely break something in the process.

The other way would be to pause the group simulation in the middle, like scheduled scripts do. We'd launch the job, insert a 'waitUntil', and continue with the other groups. And that is something we can actually do. Bohemia Interactive already does something like this in DayZ. That game has animation in the middle of simulation. Animations can run in parallel, but simulations cannot. It runs a simulation until it gets to the animation, starts the animation as a job on another CPU core, pauses that simulation, and continues with the next one. It does this until it has gone a whole round through all simulations. Then it runs a second round. This time it fetches the job results (waiting for a job if it has not finished yet), applies the animation, and continues with the second half of the simulation.

This magic is called "coroutines", and they are basically the same thing as scheduled scripts. They can be paused at any point, and let you continue with the next coroutine. Their second nice characteristic is that we can decide where to resume them, meaning we can move them between multiple CPU cores. This also allows for running the groups multithreaded. Before a part that cannot be multithreaded, we can suspend them and resume them on a single core.
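The two-round pattern described above can be sketched with Python generators, which can also pause in the middle of a function and resume later. This is an illustration of the control flow only, not engine code: `simulate_group` and `prepare_pathfinding_grid` are hypothetical stand-ins, and Python threads are used to show where work is handed off, not to demonstrate a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def prepare_pathfinding_grid(group_name):
    """Stand-in for an expensive step that is safe to run on a worker thread."""
    return f"grid for {group_name}"


def simulate_group(group_name, log):
    """One group's simulation, pausing where the parallel job is needed."""
    log.append(f"{group_name}: FSM update")  # must stay single-threaded
    # Hand out the job and pause, much like a 'waitUntil' in a scheduled script.
    grid = yield (prepare_pathfinding_grid, group_name)
    log.append(f"{group_name}: plan path with {grid}")  # single-threaded again


def run_groups(group_names):
    log = []
    with ThreadPoolExecutor() as pool:
        paused = []
        # Round 1: run every group up to its pause point and launch its job.
        for name in group_names:
            coroutine = simulate_group(name, log)
            job, argument = next(coroutine)
            paused.append((coroutine, pool.submit(job, argument)))
        # Round 2: fetch each result (waiting if needed) and finish the group.
        for coroutine, future in paused:
            try:
                coroutine.send(future.result())
            except StopIteration:
                pass  # the group's simulation ran to completion
    return log


for line in run_groups(["Alpha", "Bravo"]):
    print(line)
```

Everything written to `log` happens on the calling thread, so the parts that are not thread-safe never overlap. Only the extracted jobs run on the pool.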

To cut it short, this is how it would look (excluding Group 1 because it is special):

The more groups we have, the more work we can extract and parallelize.

For group 1 we are using a slightly different solution: we split all the units and actually run them in parallel directly. But then when we suspend them, we move them to the main thread to process the rest on there.

Ideally, we would also run this scanning in parallel to the other groups being processed, like we did for the pathfinding preparations above. I will explain below why that ended up not working.

Using these techniques:

- Job dependency graph to mesh tasks while also considering their constraints

- Coroutines to easily extract parallelizable chunks out of a bigger non-parallelizable task

- Multithreaded coroutines, for tasks that are mostly parallelizable, but only have a small chunk at the end that cannot be

We were able to improve quite a few areas of the engine. It is just a matter of finding all the spots.

Another example is explosions, which will do line intersects to all nearby objects to check if they should be hit by damage, or if they are behind cover that protects them. We saw this causing lag spikes. We first had to make line intersect safe to run in parallel, which required some changes to the way we process animations (which actually caused other problems, so we had to go through several iterations on Profiling branch to iron out all the issues). After that, we could run all these checks in parallel. However, again, it is not as simple as that. We do not only do a line intersect per object. It is once more a complex task, made from a mix of elements that are safe to multithread, and some that are not.

For each object, we do these steps:

- Check if the object is in range of the explosion (safe)

- Check if object has "HitExplosion" / "HitPart" Event Handlers, and if yes, then run them (not safe)

- Check line of sight to see if the object is behind cover (safe)

- If it is not behind cover, we apply damage and call the Explosion Event Handler (not safe)

But here we can apply the technique we developed for the group’s target scanning: multithreaded coroutines. Instead of moving back to the main thread at the end, we play a round of ping-pong, kicking the tasks back and forth between singlethreaded and multithreaded.

We start out multithreaded and do the range check. If the object does not have Event Handlers (which most objects don’t), we can just skip that part and continue to the line of sight check. And when the object is behind cover (which they often are) we are already done, and we were able to run this whole task without having to go back to being singlethreaded.

If the object has Event Handlers, and is not behind cover, we pay the cost of having to move it around. But even with that, because we can extract the expensive line of sight check, we still come out ahead.
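The ping-pong between multithreaded and singlethreaded execution can be sketched as follows. This is a simplified illustration, not the engine's implementation: each object check is a Python generator that yields the kind of thread it needs next, and a small hypothetical scheduler (`run_explosion`) alternates between a parallel phase and a main-thread phase. The object fields and `BLAST_RADIUS` are made up for the example.

```python
from concurrent.futures import ThreadPoolExecutor

WORKER, MAIN, DONE = "worker", "main", "done"
BLAST_RADIUS = 10.0  # made-up value for the sketch


def explosion_check(obj, damaged, handler_calls):
    """One object's check; yields where it needs to be resumed next."""
    if obj["distance"] > BLAST_RADIUS:  # range check (safe)
        return
    if obj["has_event_handlers"]:
        yield MAIN  # Event Handlers run scripts (not safe)
        handler_calls.append(obj["name"])
        yield WORKER  # back to the parallel phase
    if obj["behind_cover"]:  # line of sight check (safe)
        return
    yield MAIN  # applying damage is not safe
    damaged.append(obj["name"])


def run_explosion(objects):
    damaged, handler_calls = [], []
    with ThreadPoolExecutor() as pool:
        on_workers = [explosion_check(obj, damaged, handler_calls)
                      for obj in objects]
        while on_workers:
            # Parallel phase: advance each check until it needs the main thread.
            requests = list(pool.map(lambda check: next(check, DONE), on_workers))
            # Main-thread phase: run the unsafe steps one at a time.
            next_round = []
            for check, request in zip(on_workers, requests):
                if request == MAIN and next(check, DONE) == WORKER:
                    next_round.append(check)
            on_workers = next_round
    return damaged, handler_calls


objects = [
    {"name": "crate", "distance": 3.0, "has_event_handlers": False, "behind_cover": False},
    {"name": "soldier", "distance": 5.0, "has_event_handlers": True, "behind_cover": False},
    {"name": "tank", "distance": 4.0, "has_event_handlers": False, "behind_cover": True},
    {"name": "tree", "distance": 50.0, "has_event_handlers": False, "behind_cover": False},
]
damaged, handler_calls = run_explosion(objects)
```

In this example the tank and the tree never leave the parallel phase, the crate needs one trip to the main thread, and only the soldier pays for the full back-and-forth.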

MULTITHREADING OPPORTUNITIES

As we’ve seen in the examples above, it is often not as simple as it seems at first. The main factor of whether something can be multithreaded, is whether multiple threads would try to access the same data at the same time.

Scripts especially are a problem, because they can do anything and affect basically everything. We could multithread some script commands internally (nearObjects, lineIntersects, and some others), but the interaction between multiple script commands is where most of the time is spent. We did optimize scripts in general a bit over the last few years, but there is not much more left to be squeezed out of it.

For example, let's look again at the group simulation we mentioned above. Could we run Group 1 in parallel to all the others? Like this for example:

And yes, we "can". This did work in our tests, and we released it to Profiling branch for testing. But very shortly after that, the first crash reports started coming in. While we were scanning for targets, an AI mod ran a script in the group FSM, which spawned new units. Adding new units conflicted with the target scanning, which scans through the list of units, and caused the game to crash.
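The same class of bug can be shown in miniature, even without threads. In the hypothetical sketch below, a scan walks over the live container of units while a stand-in for a mod script adds a unit halfway through. Python notices the change and raises an error. A native container usually has no such check, so there the result is typically a crash instead.

```python
def scan_for_targets(units, script_hook):
    """Walk over the live unit container, running a 'script' after each unit."""
    seen = []
    for name in units:  # iterates the container that scripts can modify
        seen.append(name)
        script_hook(units)  # stand-in for a mod script running mid-scan
    return seen


def spawn_reinforcement(units):
    """Stand-in for a script in the group FSM that spawns a new unit."""
    units["reinforcement"] = {"side": "west"}


units = {"rifleman": {"side": "west"}, "medic": {"side": "west"}}
try:
    scan_for_targets(units, spawn_reinforcement)
    outcome = "scan finished"
except RuntimeError as error:
    outcome = f"scan aborted: {error}"
print(outcome)
```

With a script that leaves the unit list alone, the same scan completes normally, which matches the observation that the problem only showed up once mods were involved.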

For the vanilla base game without mods, this would have been fine, because we don’t have scripts causing such problems. But mods can do everything and anything, and we need to be very careful with what we do.

A very big part of the game’s frametime is simulation for objects (units, vehicles, buildings, etc.). This is where the biggest potential for multithreading lies, but it is also the hardest area to solve. For one, there are scripts and Event Handlers all over the place, but the engine simulation itself also has lots of interconnected parts. For example, a unit taking an item out of a box; we cannot have multiple units doing that at the same time. We would need to separate simulation into safe and unsafe parts.

For AI simulation that was relatively simple. The heaviest parts are easy to spot and easy to extract. For object simulation, there are generally no heavy parts to take out. Simulation of one object is quite fast, but the problem comes from having to simulate thousands of them. And while it’s fast, simulation is also very big: thousands of lines of code big, organically grown over the last 20 years, with little or no consideration for multithreading back in the early days. Even if we miss just one line of code in there that ends up executing scripts, or interacts with some other object, we run the risk of crashing the game.

While it would be possible to unravel it all, we also have to be realistic in that we have limited resources allocated to Arma 3 at this stage. Doing all of this costs time. We focused on the big chunks at first. AI offered relatively big gains for relatively little effort. Simulation is very complex, and expected to yield comparatively small gains.

We will continue working on this, but with the biggest and easiest fixes already taken care of, we are only left with small, risky and complex things. And we also have to balance the time investment with everything else that needs doing. We can either give some players one or two more FPS, or we can spend the time on adding cool features like the web browser UI or Steam Game Recording integration.

Because not everything can be multithreaded, the performance impact of what we have done so far will vary per situation, and especially with mods loaded. Our improvements to AI and rendering will not have much impact on a script-heavy mod. We try to provide improvements to some script commands, and are always looking for hints as to which ones need some more work, but we cannot fix the overall cost of trying to run many scripts.

WHY NOW?

There has been a steady effort of modernizing our RV engine over the last few years.

Back in 2020, we used to build Arma 3 with Visual Studio 2013 and the C++14 standard. Since then, we’ve upgraded all our code and libraries to VS 2022 and C++23. Upgrading over a million lines of code through nine years of language evolution did take a lot of time, especially as we were doing it on the side in parallel with all other development. Plus, we had to make sure we didn’t break anything while doing it. We did break quite a few things along the way, but thanks to everyone participating on the Profiling branch, we were able to find and fix most of them quickly.

Upgrading our multithreading code is just a part of that effort, and we are still not done.

- Dedmen